
GenAI Is Squeezing Entry‑Level Contact Centre Roles

There’s been plenty of discussion about the impact of AI on those just entering the job market. According to job data, the first rung on the career ladder is being removed.

Latest research from Stanford University’s Digital Economy Lab shows generative AI is beginning to reshape US employment, with disproportionate impacts on entry-level workers in AI-exposed occupations.

Specifically, early-career workers aged 22–25 in the most AI‑exposed occupations have experienced a 13% relative decline in employment since late 2022, the point at which GenAI’s impact was first felt. In contrast, the research showed that employment rates for older workers in the same jobs, and for workers in less-exposed fields, were stable or growing.

‘Highly exposed’ roles included customer service.

This caught my eye. So, I decided to explore whether these trends are likely to be replicated closer to home. And if so, what to do about it.

This new data adds focus and more evidence to the ongoing debate about ‘Augment versus Replace’. And on that point, the research offers an immediate clue in one of the six facts the authors surface from their analysis.

Fact 3 – Automation vs. augmentation: Declines concentrate where AI is used to automate tasks. In occupations where AI primarily augments work, entry-level employment holds up or grows.

At face value this is not news, especially if you feel familiar with the ‘Augment versus Replace’ argument in relation to contact centre teams. However, to dismiss it as such is to miss the broader context and the politics that surround the topic.

In a world full of clickbait discussion, decision makers are still being persuaded that Gen/Agentic AI has the power to sweep away all human value in its path. As a result, ‘Augment versus Replace’ distinctions become blurred, placing entry-level recruitment in jeopardy, as the research indicates.

Just look around if you doubt the level of persistent confusion influencing decision makers, especially those who lack enough foundational understanding of current AI capability to judge these claims for themselves.

Here’s one thread in which senior figures in the market research community are punching back at the suggestion they are now irrelevant as a profession.

I’ve just read an apocalyptically worded post from a board level advisor proclaiming the steep decline in Gartner stock price (40%) was proof that their days are numbered since AI can replicate analyst insights in a matter of seconds. Despite multiple counter arguments, his ‘end of days’ mindset was unmoved.

These points of view remain commonplace. Whatever the motivations (vested interest, ignorance) they seed perceptions and influence decisions. In the context of a contact centre entry level workforce, this can mean the opportunity to augment is missed for the binary option of replacement.

Even if you personally believe the value of AI to a contact centre workforce is about augmentation, you still need to ensure this remains the narrative in the minds of those who can make opposite decisions. Opinion on the scope and value of AI remains a wide spectrum that will take years to converge, if it ever does.

So my message is this.

- Assume nothing

- Proactively redesign the value of entry level colleagues

- Promote it as the way AI-first organisations leverage human value

Here’s an introduction to how you redesign the value of entry level colleagues. In doing this you will also evolve your overall operating model into one that intentionally supports and optimises AI-Human partnerships.

In other words, move from the fuzzy sentiment of ‘Augment versus Replace’ to operational clarity. Redesign how you develop a contact centre talent pipeline so that it dynamically aligns with AI’s expanding role and value.

Boosting the Value of Entry Level Colleagues

Before getting into the detail, let’s answer the relevancy question. Does a US study apply to the UK? There is evidence to suggest so.

A July 2025 review by McKinsey of AI’s uneven impact on the UK job market found sharper falls since 2022 in high‑exposure occupations (a 38% drop) than in low‑exposure ones (21%).

This is consistent with employers tempering demand where AI is expected to reshape tasks, a response pattern that is directionally consistent with US recruitment decision making.

That said, I’d also encourage you to tap into your own networks to refine understanding of what’s happening at sector and local levels.

Whatever you might learn, big picture trends tell us this.

Generative AI is compressing routine inquiry handling and shifting value to tacit knowledge, complex resolution, and orchestration work.

Therefore, to protect early‑career pipelines and sustain performance, contact centres need to redesign recruiting, onboarding, and support to favour augmentation over automation, and rewire the operating model around human-supervised workflows, skills mobility, and outcome-based management.

Here’s a set of Brainfood checklists to share with your team as a framework for co-creating your own response. Also check out the offer at the end of this article if you want access to the full workshop agendas they’ve been extracted from.

Recruiting

- Profile for augmentation, not replacement: Prioritise candidates with learning agility, situational judgment, and collaboration skills over narrow script adherence, because entry‑level employment is declining where AI automates codified tasks and is steadier where usage is augmentative and collaborative.

- Assess for tacit knowledge potential: Introduce scenario-based assessments that test ambiguity handling, escalation judgment, and tool‑coaching aptitude, reflecting the US evidence that tacit knowledge buffers experienced workers and that directive* AI usage raises substitution risk for novices.

- * When the AI takes over routine tasks completely and outputs final decisions or responses with little human input

- Hire for growth paths: Target diverse entrants with adjacent strengths (care, hospitality, claims) and make transparent pathways into complex queues, QA, knowledge ops, and AI‑ops, countering the narrowing of entry slots in high‑exposure roles by widening “on‑ramps” to augmented work.

Onboarding

- From scripts to systems thinking: Compress product/script training and front‑load “AI co‑work” skills (prompting patterns, verification, exception detection, and handoff discipline), since employment holds up where AI augments rather than directs work.

- Tacit knowledge scaffolding: Pair each new advisor with a coach and structured case-replay routines that surface non‑obvious heuristics*, because older cohorts’ resilience is tied to tacit knowledge not replaced by AI.

- * The goal is to help new agents learn subtle, context-specific strategies i.e. the practical tips, judgment calls, and workarounds that aren’t written in rulebooks or easily automated by AI systems.

- Early complexity exposure: Rotate new hires through controlled complex cases with shadowing and debriefs to accelerate tacit accumulation and reduce dependence on the automation-led AI usage patterns linked to entry‑level displacement.

In‑role Support

- Human supervised guardrails: Mandate verification steps for AI outputs on policy, refunds, and compliance; position AI as a “first draft” and require human improvement & validation for high‑impact actions, aligning with augmentation modes associated with better employment outcomes.

- Live mentoring and swarm support: Implement floorwalkers/virtual swarms to unblock novel cases rapidly. This reinforces tacit transfer and reduces the risk that directive AI handles routine tasks so efficiently that advisors become over-reliant on it, rarely practising independent problem-solving or developing deeper expertise.

- Skills telemetry: Track individual augmentation proficiency (prompt chains used, correction rates, resolution deltas with/without AI) and use it to personalise coaching, ensuring that as routine work is automated, agents develop the unique human skills needed for specialist roles instead of being made redundant.
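As a rough illustration of the skills telemetry item above, here is a minimal Python sketch of how correction rates and resolution deltas might be computed per advisor. The `Contact` record and all of its field names are hypothetical, and "resolved" is assumed to mean first-contact resolution; your own contact platform will expose different data.

```python
from dataclasses import dataclass


@dataclass
class Contact:
    """One handled contact. All field names are hypothetical."""
    advisor: str
    used_ai: bool        # was an AI draft or lookup used on this contact?
    ai_corrected: bool   # did the advisor edit the AI draft before sending?
    resolved: bool       # assumed to mean resolved at first contact


def rate(flags: list[bool]) -> float:
    """Share of True values, or 0.0 when there is nothing to measure."""
    return sum(flags) / len(flags) if flags else 0.0


def telemetry(contacts: list[Contact], advisor: str) -> dict[str, float]:
    """Summarise one advisor's augmentation habits from contact records."""
    mine = [c for c in contacts if c.advisor == advisor]
    with_ai = [c for c in mine if c.used_ai]
    without_ai = [c for c in mine if not c.used_ai]
    return {
        # How often the advisor works with AI at all.
        "ai_usage_rate": rate([c.used_ai for c in mine]),
        # How often AI drafts are improved rather than passed through.
        "correction_rate": rate([c.ai_corrected for c in with_ai]),
        # Resolution rate with AI minus resolution rate without it.
        "resolution_delta": rate([c.resolved for c in with_ai])
        - rate([c.resolved for c in without_ai]),
    }
```

A very low correction rate alongside heavy AI usage could be one prompt for a coaching conversation about over-reliance, though what counts as "low" is something each team would need to calibrate for itself.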

Role Design

- Redefine “entry level”: Refocus from simple query resolution to more advanced work: overseeing (orchestrating) how AI tools interact with customers, double-checking (validating) AI-generated output, and handling situations where AI cannot provide a satisfactory answer (exception handling).

- Dual tracks from day one: Create two visible progressions, Complex Care (billing disputes, regulated complaints, tech troubleshooting) and Enablement (QA, knowledge curation, prompt library stewardship, bot‑tuning), so entrants see tangible futures beyond routine tickets.

- Outcome ownership: Shift KPIs from average handle time and adherence to blended outcomes such as first‑contact resolution, verified accuracy, effort scores, and lifetime value impact. These better reflect augmented work quality and reduce perverse incentives to over‑automate, which can degrade quality and customer trust.

Operating Model Changes

- AI‑ops and Knowledge‑ops hubs: Establish small cross‑functional teams that own prompt libraries, guardrails, retrieval quality, and case pattern mining. This turns augmentation into a core, institutional capability rather than a sporadic, tool-driven activity. Use them as career pathways for advisors progressing into specialist and leadership roles.

- Queue architecture: Separate “automation‑first” queues (fully self‑serve with exception escape hatches) from “augmentation‑first” queues (human‑led with AI drafting/lookup), routing new agents preferentially to augmentation queues to accelerate learning and resilience.

- Supervisory model: Managers become enablement leaders who coach on effective human-AI collaboration, review verification quality, and collect and share real examples of best practice. This ensures tacit knowledge is systematically transferred from seasoned staff to new entrants, boosting resilience against automation risks.

- Workforce planning: Reduce bulk hiring of homogeneous entry roles; instead, hire fewer but higher‑trajectory entrants and invest in faster progression to complex/value roles, addressing the evidence of shrinking entry slots in exposed occupations.

- Governance and risk: Institute policy engines that flag when AI suggestions exceed authority limits and require senior review, ensuring human accountability and judgement remain central, both now and as agentic-level autonomous decision making is released into the market.
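To make the queue architecture and governance items above a little more concrete, here is a minimal Python sketch under stated assumptions. The 12-week threshold, the queue names, the role names, and the refund limits are all invented for illustration; a real policy engine would draw these from your own rules and would cover far more than refunds.

```python
from dataclasses import dataclass

# Hypothetical values for illustration only.
NEW_ADVISOR_WEEKS = 12
REFUND_LIMIT_BY_ROLE = {"advisor": 50.0, "senior": 500.0}


@dataclass
class Advisor:
    name: str
    role: str            # assumed to be "advisor" or "senior"
    weeks_in_role: int


def assign_queue(advisor: Advisor) -> str:
    """Send newer advisors to human-led, AI-assisted work.

    Simplification: experienced advisors are shown covering the escape
    hatches from automation-first queues, though in practice they would
    likely work both queue types.
    """
    if advisor.weeks_in_role < NEW_ADVISOR_WEEKS:
        return "augmentation_first"
    return "automation_exceptions"


def needs_senior_review(advisor: Advisor, suggested_refund: float) -> bool:
    """Flag an AI-suggested refund that exceeds the advisor's authority."""
    # An unknown role gets a limit of 0.0, so any refund is escalated.
    limit = REFUND_LIMIT_BY_ROLE.get(advisor.role, 0.0)
    return suggested_refund > limit
```

The point of the sketch is that both rules are explicit and auditable: the routing preference and the authority limit live in plain configuration rather than inside the AI tool itself.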

Learning and Progression

- Micro‑apprenticeships: 8–12 week sprints pairing entrants with senior advisors on specific complex themes (e.g., regulated complaints), with AI‑assisted case mining to select teachable moments – codifying tacit knowledge into playbooks while avoiding the trap of automating learning so much that advisors miss out on curiosity, judgment, and context.

- Certification for augmentation: Tiered credentials around prompting (how to instruct AI), retrieval hygiene (ensuring information sourced by AI is accurate and current), verification (reviewing and improving AI outputs), and “red-team” skills (identifying AI failure modes and edge cases). These credentials enable progress into more specialist roles.

- Feedback loops to product: Formalise pathways for agents to pass pattern insights and failure modes to product/engineering, creating value beyond ticket closure and justifying richer early‑career roles as routine work shrinks.

Metrics To Steer The Transition

- Augmentation quality index: It focuses not on how much work advisors complete, but on how well they improve and validate AI-generated responses. This indicates successful augmentation habits correlated with more resilient employment.

- Tacit velocity: How quickly advisors develop the practical, experience-based skills (judgment, intuition, and contextual problem-solving) needed to handle complex customer cases without supervision.

- Progression health: Monitor internal fill rates for complex/enablement roles and time‑to‑promotion for entrants; healthy pipelines counteract the structural squeeze on entry‑level headcount in exposed occupations.
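The three metrics above are described qualitatively, so any formula is a judgment call. The Python sketch below shows one possible way to put numbers on them. Every definition here is an assumption rather than an established standard: the quality index is taken as the share of AI-assisted responses that were validated and later judged accurate, tacit velocity as the median weeks until a cohort handles complex cases unsupervised, and progression health as the internal fill rate for complex/enablement roles.

```python
from statistics import median


def augmentation_quality_index(verified_accurate: int, ai_assisted: int) -> float:
    """Assumed definition: share of AI-assisted responses that the advisor
    validated and that QA later judged accurate."""
    return verified_accurate / ai_assisted if ai_assisted else 0.0


def tacit_velocity(weeks_to_unsupervised: list[int]) -> float:
    """Assumed definition: median weeks for a cohort to handle complex
    cases without supervision. Lower means faster skill development.
    Raises statistics.StatisticsError if the cohort list is empty."""
    return float(median(weeks_to_unsupervised))


def internal_fill_rate(internal_fills: int, total_fills: int) -> float:
    """Share of complex/enablement vacancies filled by internal entrants."""
    return internal_fills / total_fills if total_fills else 0.0
```

Whatever definitions you settle on, the trend over time is likely to be more informative than any single reading.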

Why AI Technology Investment Alone Is Not Enough

The Case for Deliberate Augmentation Strategy

Simply investing in AI technology and assuming optimal workforce balance will emerge naturally is a costly strategic error that ignores the fundamental dynamics of how AI impacts employment patterns.

The Brynjolfsson research that triggered this article suggests that AI deployment without intentional augmentation design leads to predictable workforce displacement: a 13% relative decline in employment for early-career workers in the most AI-exposed occupations, concentrated where AI usage is directive rather than collaborative.

This is because technology-driven transformation creates path dependency: self-reinforcing patterns that become increasingly difficult and expensive to change over time.

In this instance, organisations focussing solely on AI capability development inadvertently train their workforce to become passive consumers of AI outputs rather than active collaborators, accelerating displacement risk rather than building resilience.

Here’s the full dynamic:

- Directive path: Advisors become passive → their skills atrophy → they can only handle routine work → more tasks get automated → workforce becomes less capable and more vulnerable

- Augmentative path: Advisors become collaborators → their skills deepen → they handle complex exceptions → human value increases → workforce becomes more resilient and valuable

The evidence shows that employment outcomes depend not on AI sophistication alone, but on how AI is integrated with human work. This integration requires deliberate workforce strategy, skills development, and cultural change that pure technology investment cannot deliver.

Without proactive augmentation investment, even the most advanced AI deployment will systematically erode your talent pipeline, reduce service differentiation, and leave your organisation vulnerable to the next wave of automation advances.

The choice is not between technology investment and workforce development.

It’s between strategic augmentation that preserves human value alongside AI capabilities and accidental automation that hollows out organisational capability. Meanwhile, competitors who invest in both technology and human-AI collaboration pull ahead in service quality, innovation, and market resilience.

The organisations that treat workforce augmentation as optional will find themselves with powerful AI tools operated by a diminished, demoralised workforce incapable of handling the complexity that drives competitive advantage and customer loyalty.

This issue becomes increasingly urgent as the current arc of AI capability continues to challenge traditional ways in which work is undertaken.

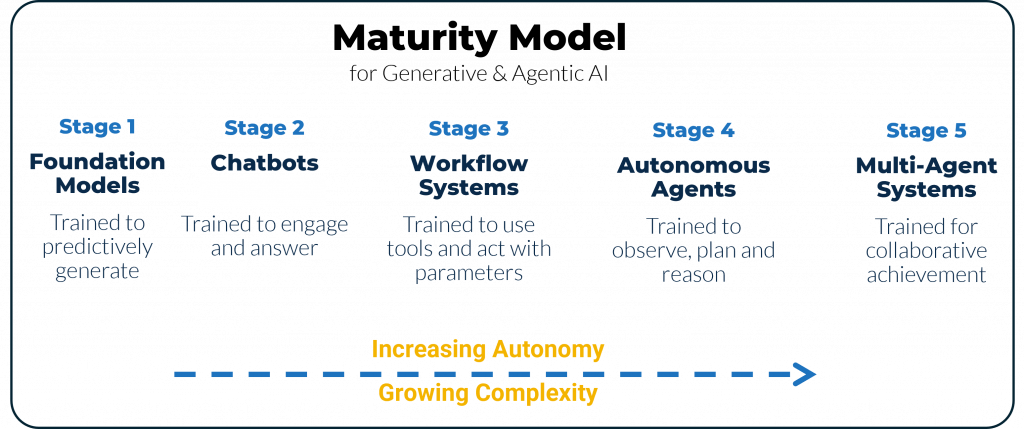

Right now we are entering the stage of AI workflow transformation, which will significantly improve journey outcomes and how they are orchestrated.

As just explored, the way these are designed matters. A tech-only focus has clear downstream consequences.

In response to this emerging need, Brainfood is currently looking for advanced AI contact centre operations (those most mindful of the issues explored in this article) to become early adopters of our ‘AI+People Augmentation’ Programme.

If you are potentially interested and want an exploratory discussion on what’s covered, how value is created, and the way that the programme is structured, please let us know.